🌟 Introduction

Hello DevOps enthusiasts! Welcome back. Today, we're diving deep into working with Terraform and AWS. We'll cover essential AWS services: EC2, S3, DynamoDB, KMS, and VPC. On the Terraform side, we cover state management, validation, provisioners, and lifecycle management, and we use Snyk to catch issues early and improve security. So, let's get started!

📜 Prerequisites:

AWS CLI installed and configured with your credentials

AWS account

Terraform installed

Code editor (I used VS Code for this deployment)

Snyk extension for VS Code (go to Extensions, install Snyk, then create a Snyk account)

📚 Steps for Implementation:

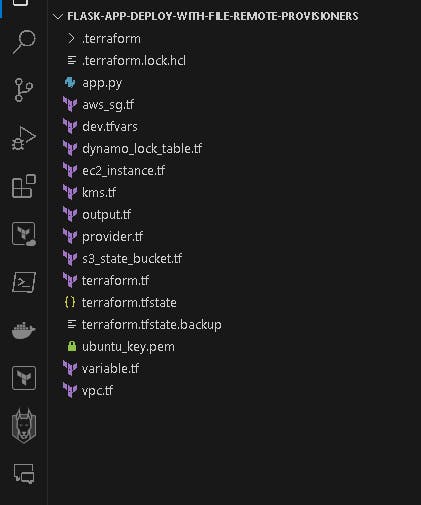

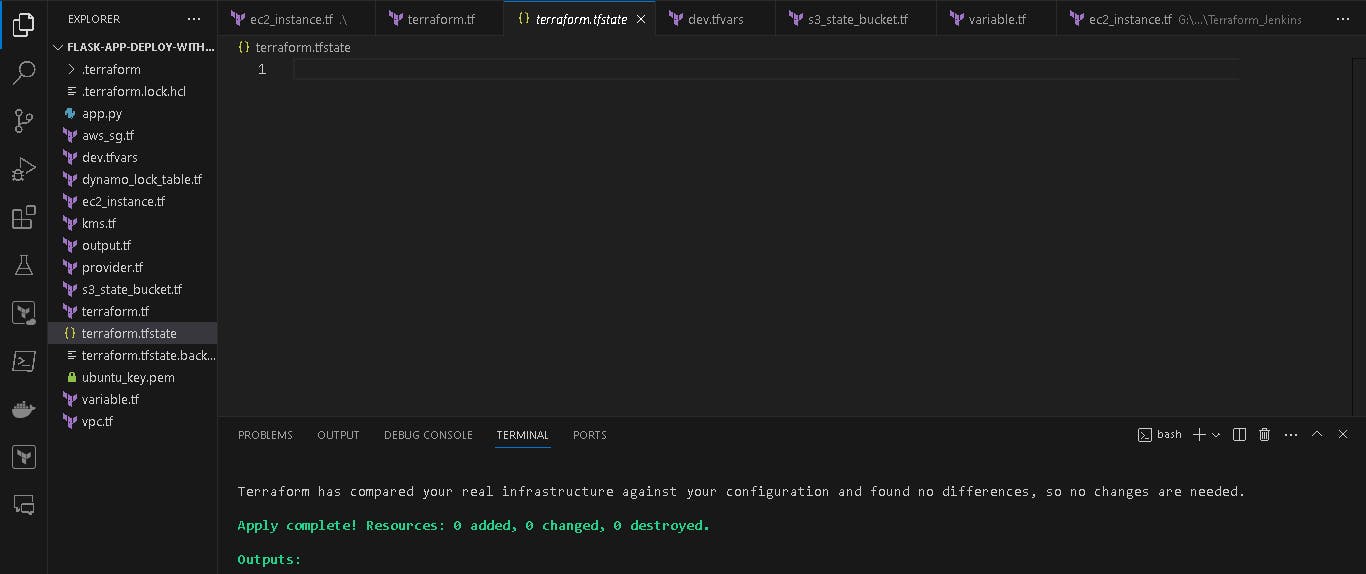

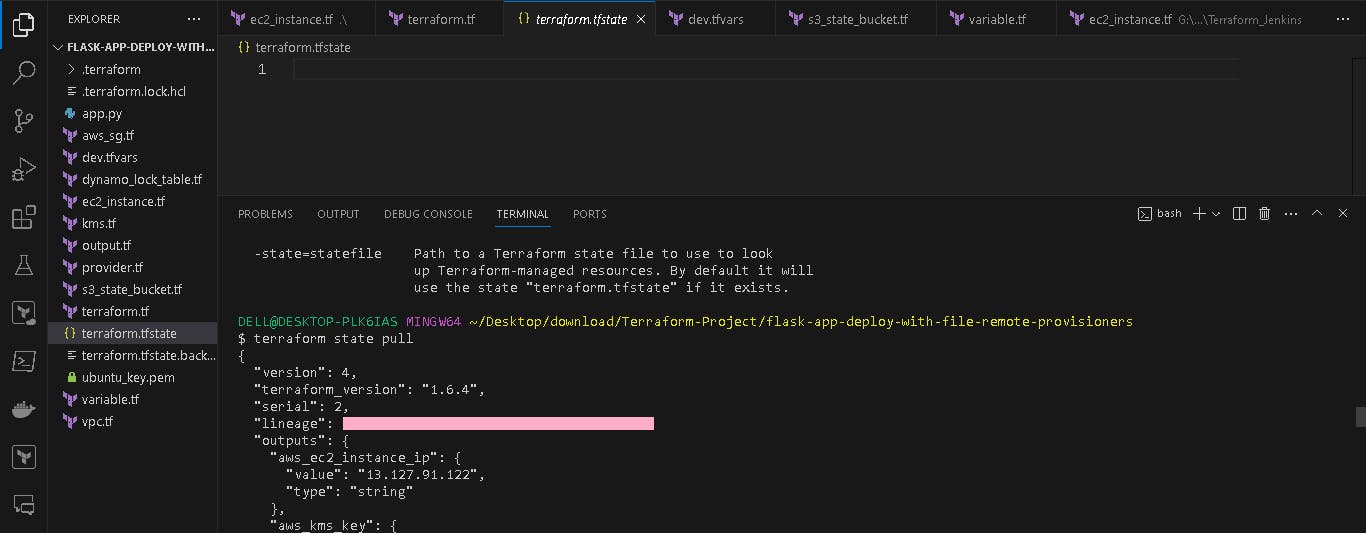

Summary view of the Terraform files in VS Code:

👨‍💻 Create AWS Services using Terraform:

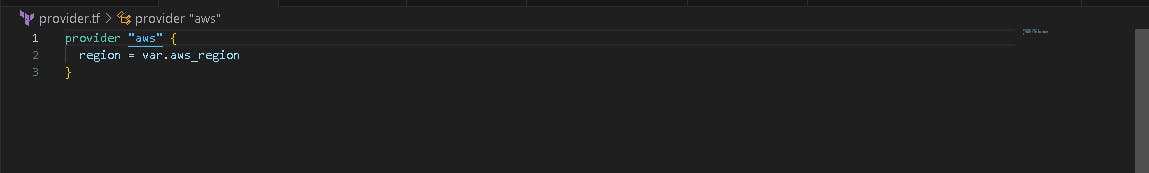

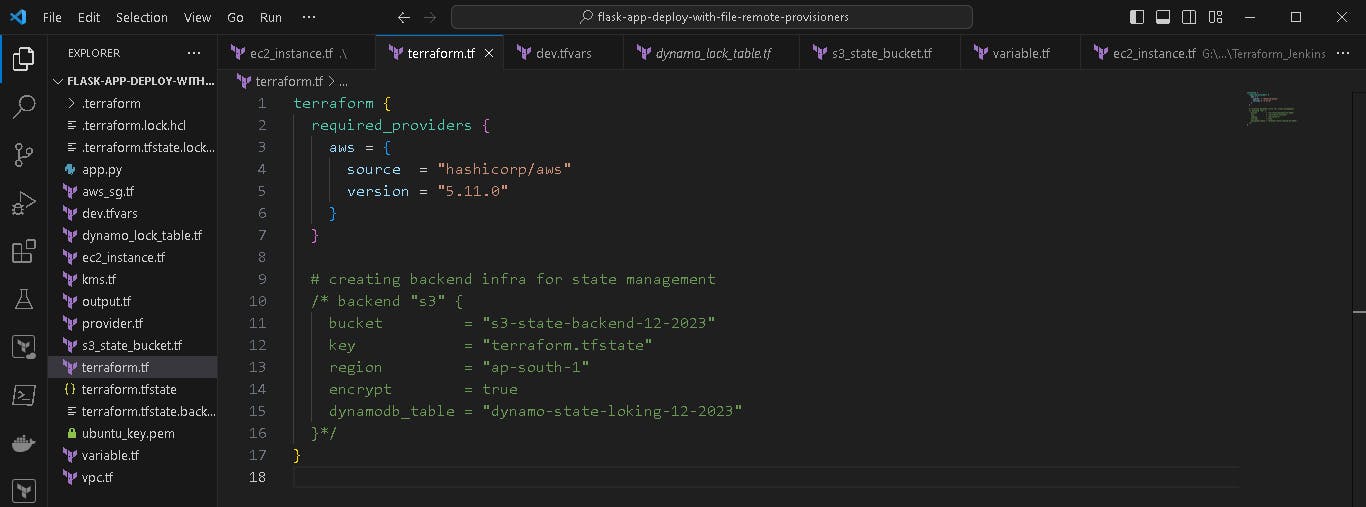

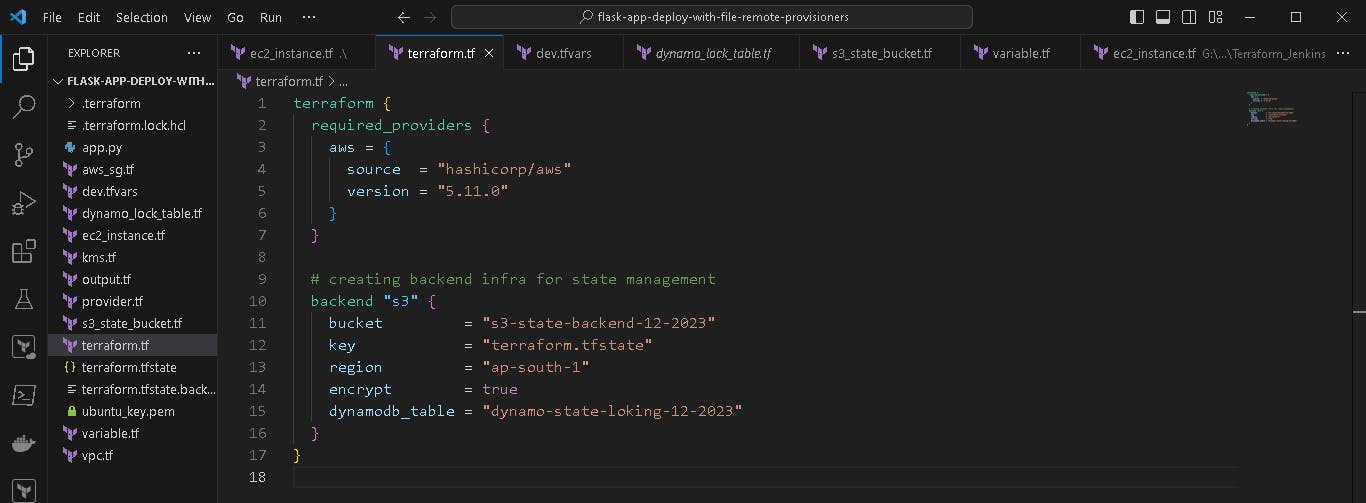

🤖 Create terraform.tf and provider.tf files

We will start by declaring the terraform.tf and provider.tf files.

In terraform.tf we configure Terraform itself (this is also where the backend block lives), and in provider.tf we configure the AWS provider.

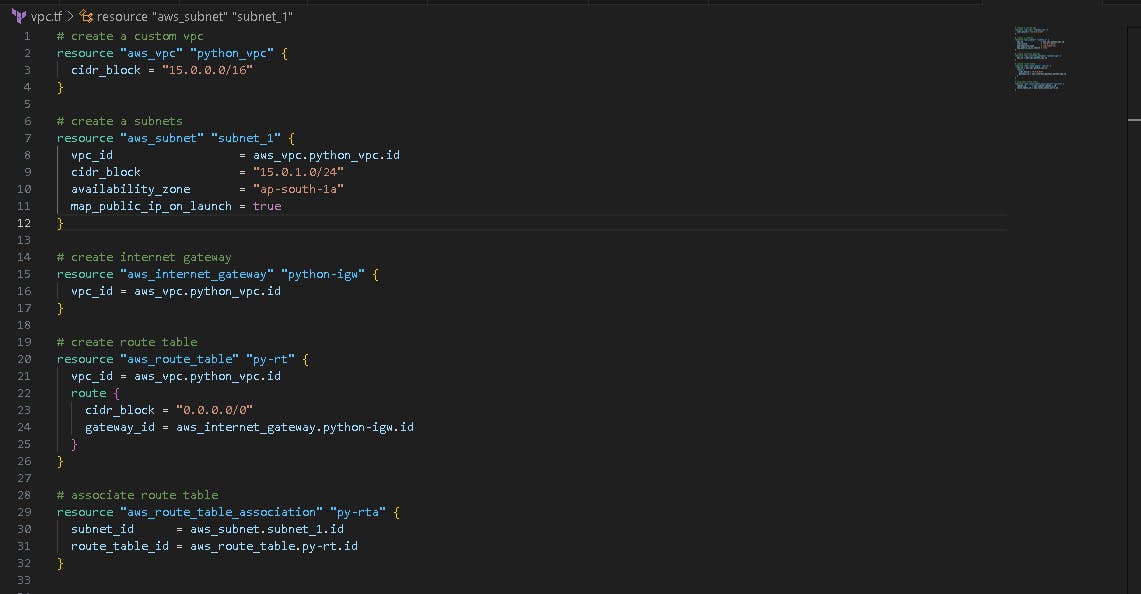

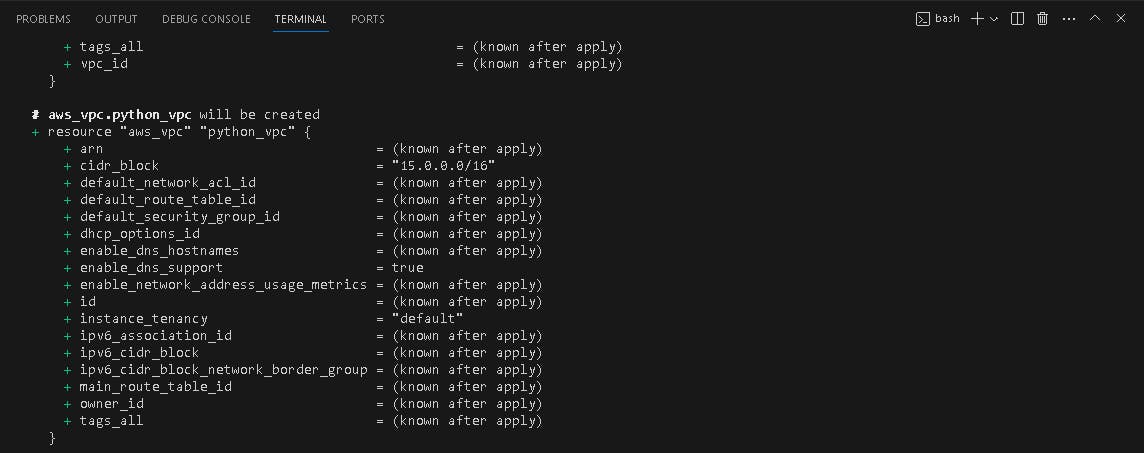

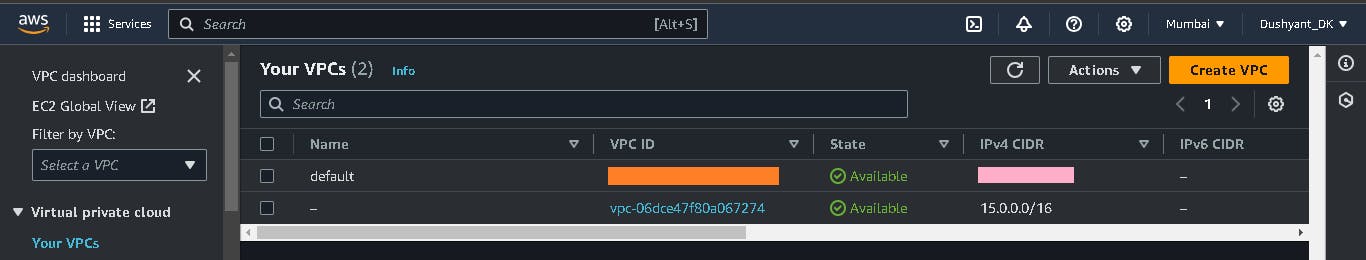
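For readers who can't see the screenshots, here is a minimal sketch of what these two files typically contain. The version constraints and the us-east-1 region are assumptions, not values taken from the original project; adjust them to your own setup.

```hcl
# terraform.tf (sketch): pin Terraform and the AWS provider.
# The version constraints are assumptions.
terraform {
  required_version = ">= 1.5.0"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
  }

  # The backend "s3" block stays commented out until the
  # state bucket and the DynamoDB lock table exist.
}

# provider.tf (sketch): credentials are read from the AWS CLI configuration.
provider "aws" {
  region = "us-east-1" # assumed region; use the one you deploy to
}
```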

🤖 Create vpc.tf file (network)

Now, we create the vpc.tf file, which defines the network (VPC) that our resources run in.

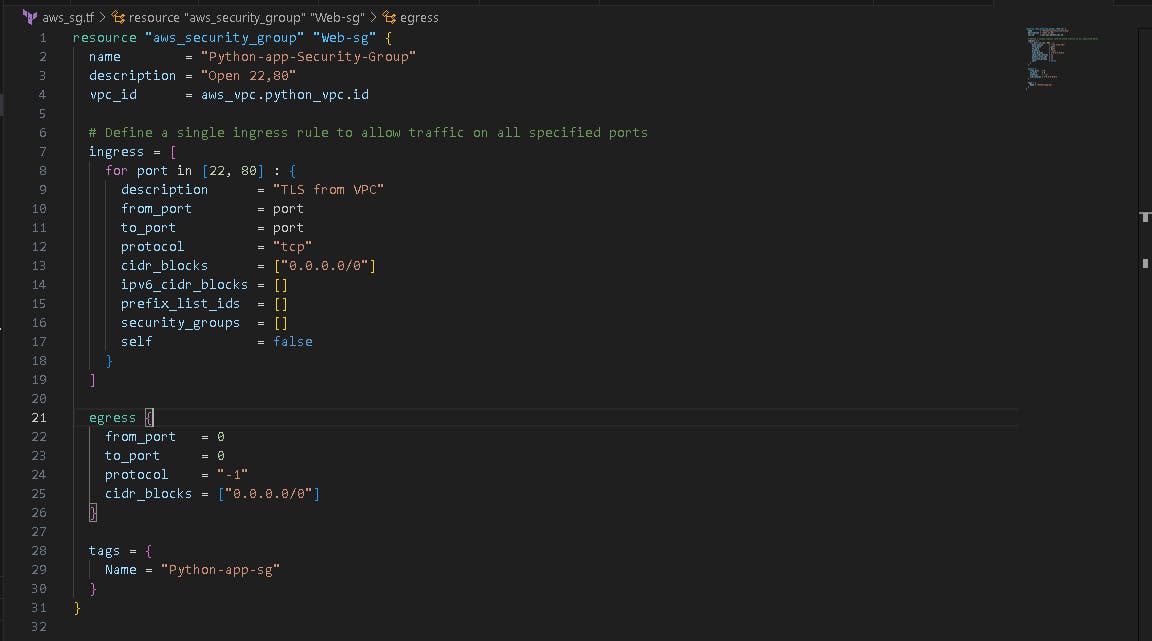
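A minimal sketch of a vpc.tf along these lines, assuming one VPC with a single public subnet so the EC2 instance can be reached over SSH. The resource names (main, public, igw), the CIDR ranges, and the availability zone are hypothetical.

```hcl
# vpc.tf (sketch): one VPC with a single public subnet.
resource "aws_vpc" "main" {
  cidr_block           = "10.0.0.0/16"
  enable_dns_hostnames = true

  tags = {
    Name = "dev-vpc"
  }
}

resource "aws_subnet" "public" {
  vpc_id                  = aws_vpc.main.id
  cidr_block              = "10.0.1.0/24"
  availability_zone       = "us-east-1a" # assumed AZ
  map_public_ip_on_launch = true         # instances get a public IP

  tags = {
    Name = "dev-public-subnet"
  }
}

# Internet gateway and route table so the subnet can reach the internet.
resource "aws_internet_gateway" "igw" {
  vpc_id = aws_vpc.main.id
}

resource "aws_route_table" "public" {
  vpc_id = aws_vpc.main.id

  route {
    cidr_block = "0.0.0.0/0"
    gateway_id = aws_internet_gateway.igw.id
  }
}

resource "aws_route_table_association" "public" {
  subnet_id      = aws_subnet.public.id
  route_table_id = aws_route_table.public.id
}
```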

🤖 Create aws_sg.tf file (security group)

Now, we create the aws_sg.tf file, which defines the security group (the firewall rules) for the EC2 instance.

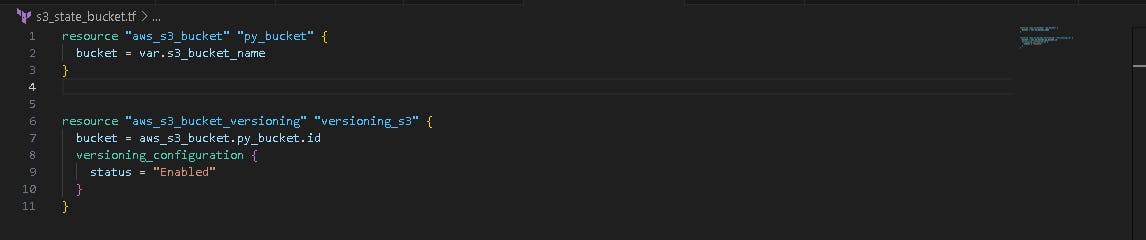

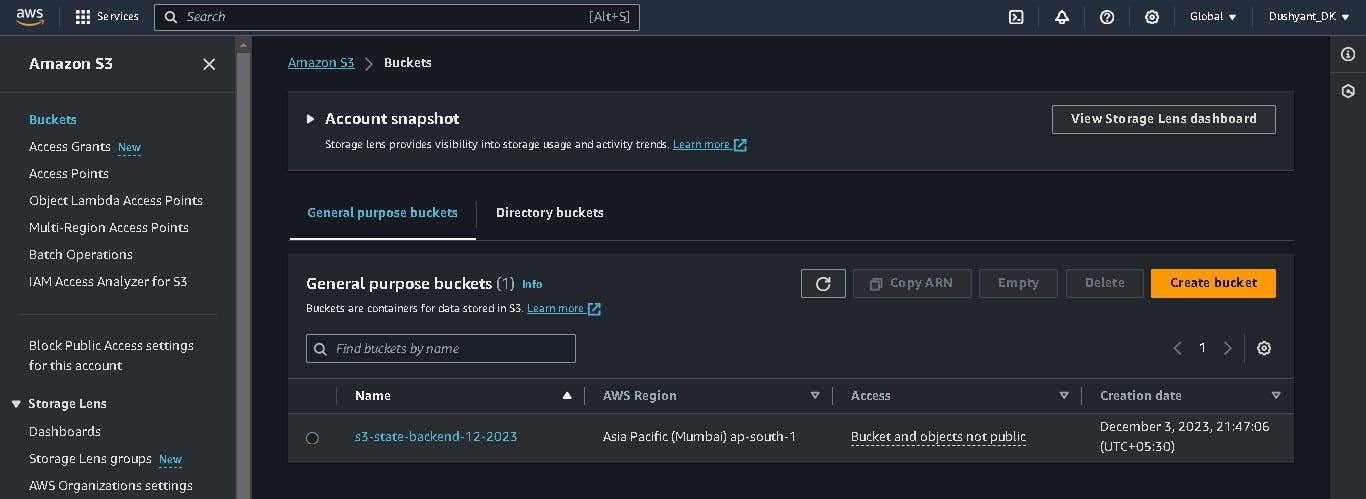
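A sketch of a security group for the dev server, assuming the VPC resource is named aws_vpc.main and that the instance needs SSH (port 22) and HTTP (port 80). The resource name dev_sg and the open 0.0.0.0/0 ranges are assumptions; restricting SSH to your own IP is safer, and an open SSH port is the kind of issue a scanner such as Snyk typically flags.

```hcl
# aws_sg.tf (sketch): allow SSH and HTTP in, everything out.
resource "aws_security_group" "dev_sg" {
  name        = "dev-server-sg"
  description = "Allow SSH and HTTP inbound traffic"
  vpc_id      = aws_vpc.main.id # assumes the VPC resource is named "main"

  ingress {
    description = "SSH"
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"] # tighten to your own IP in practice
  }

  ingress {
    description = "HTTP"
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # Allow all outbound traffic (protocol "-1" means all protocols).
  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
}
```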

🤖 Create s3_state_bucket.tf file

Now, we create the s3_state_bucket.tf file, which creates the S3 bucket that will store the Terraform state file (terraform.tfstate).

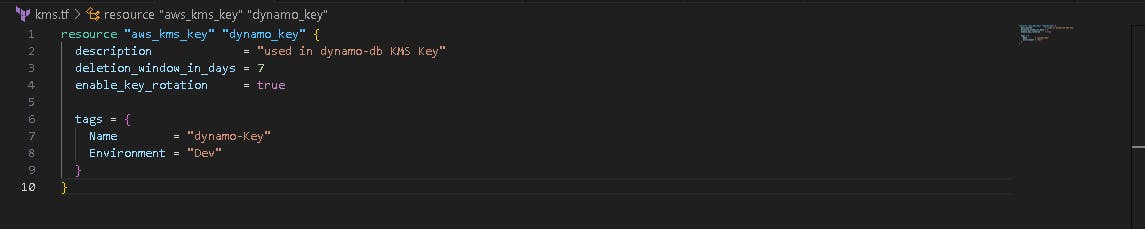

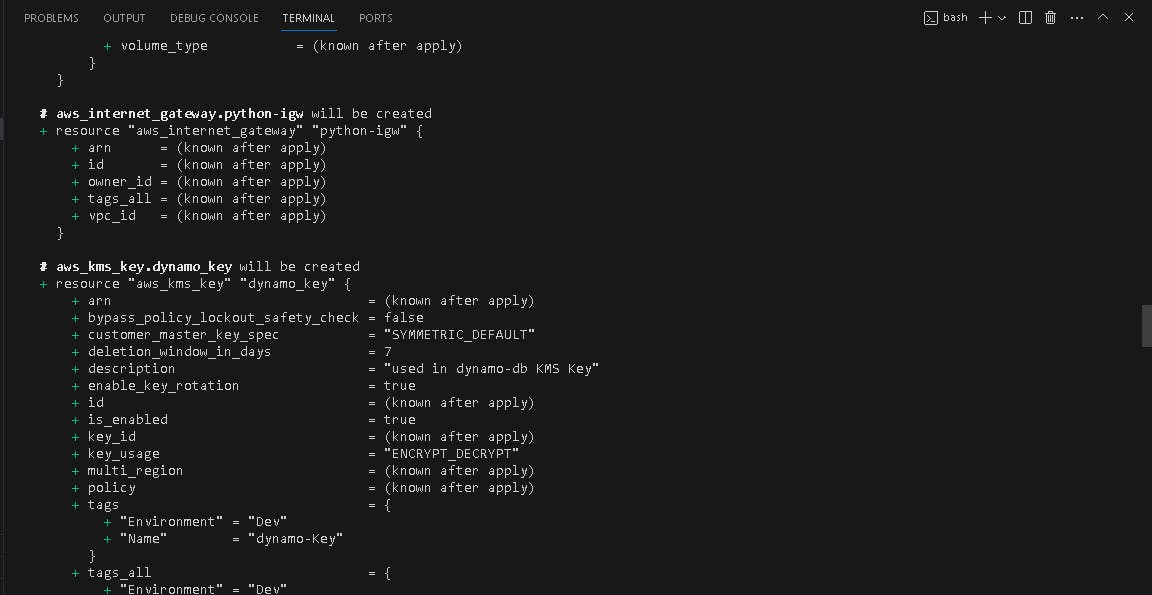

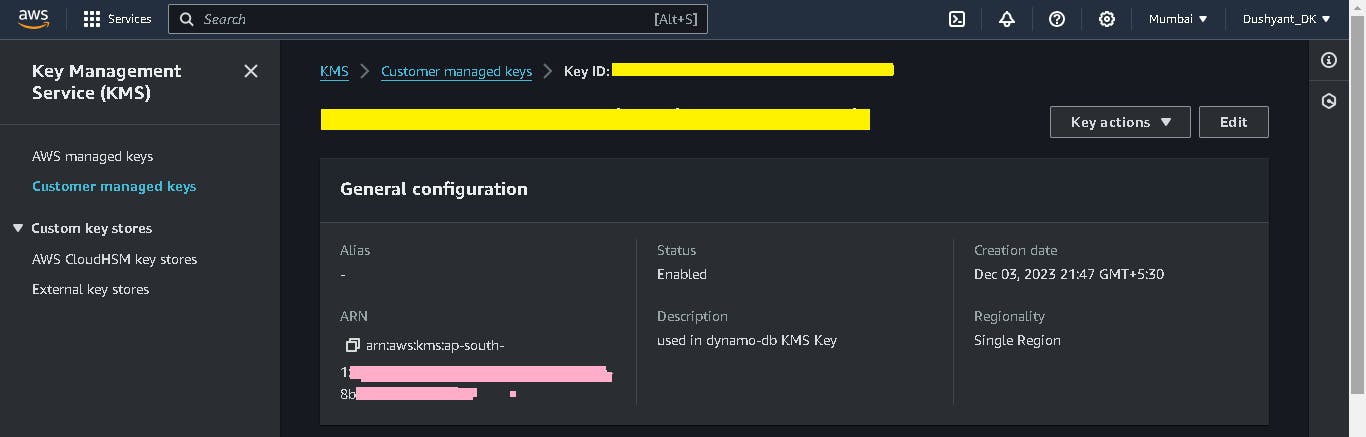
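A minimal sketch of the state bucket, assuming a hypothetical bucket name (S3 bucket names must be globally unique). Versioning is enabled so earlier versions of the state can be recovered, and default server-side encryption protects the state file, which can contain sensitive values.

```hcl
# s3_state_bucket.tf (sketch): bucket that will hold terraform.tfstate.
resource "aws_s3_bucket" "state" {
  bucket = "my-terraform-state-bucket-12345" # hypothetical, must be globally unique
}

# Keep every version of the state file so mistakes can be rolled back.
resource "aws_s3_bucket_versioning" "state" {
  bucket = aws_s3_bucket.state.id

  versioning_configuration {
    status = "Enabled"
  }
}

# Encrypt objects at rest by default.
resource "aws_s3_bucket_server_side_encryption_configuration" "state" {
  bucket = aws_s3_bucket.state.id

  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "AES256"
    }
  }
}
```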

🤖 Create kms.tf file

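Now, we create the kms.tf file, which defines a KMS key for encrypting the DynamoDB table, with automatic key rotation enabled and a 7-day deletion window (KMS rotation itself defaults to once a year; 7 days is the minimum waiting period before a key is deleted).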

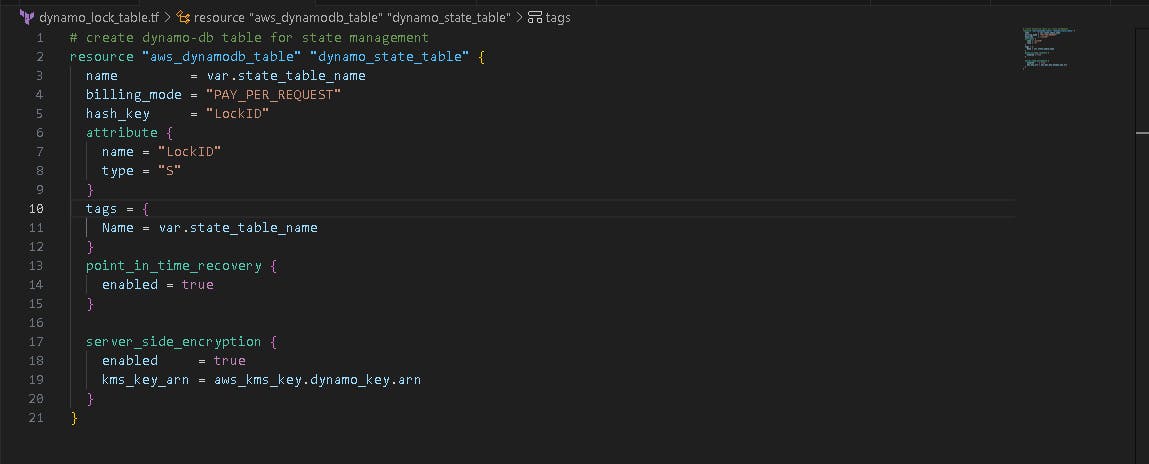

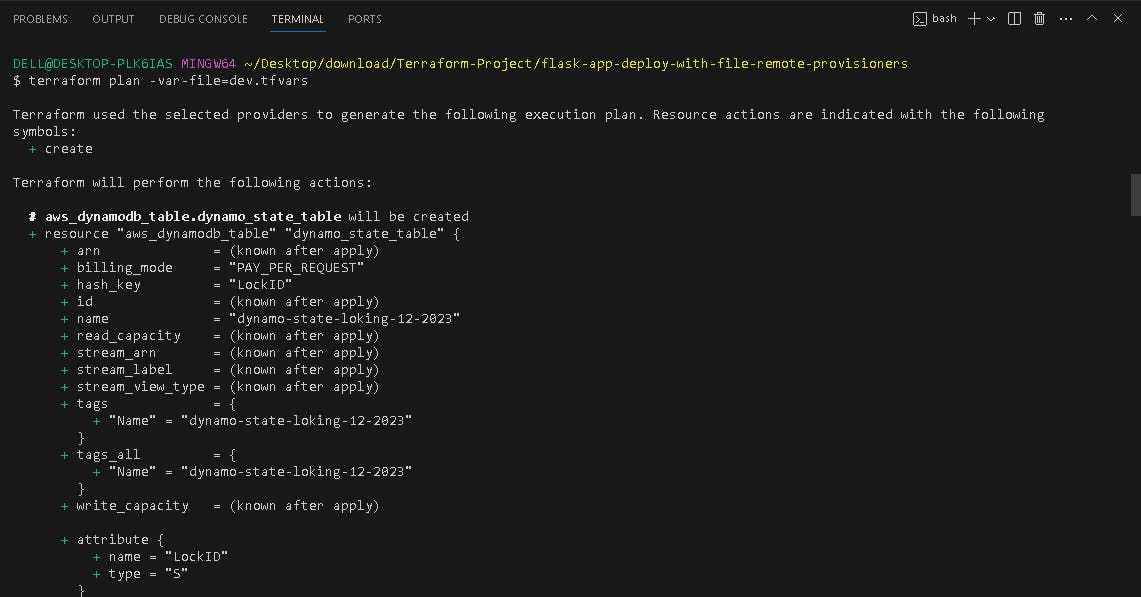

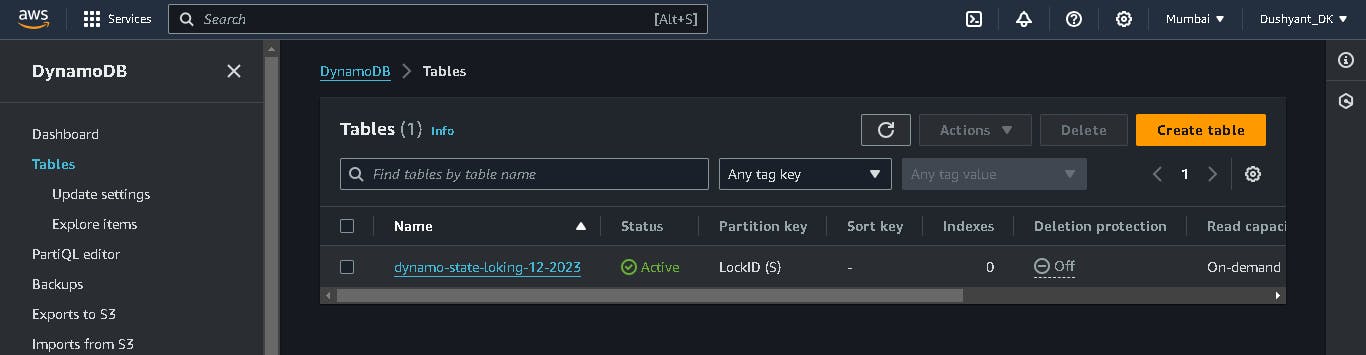
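A sketch of the key, assuming the hypothetical resource name dynamodb and a hypothetical alias. enable_key_rotation turns on automatic rotation, while deletion_window_in_days sets how long KMS waits before deleting the key once it is scheduled for deletion (7 to 30 days).

```hcl
# kms.tf (sketch): customer-managed key for the DynamoDB lock table.
resource "aws_kms_key" "dynamodb" {
  description             = "KMS key for the Terraform lock table"
  enable_key_rotation     = true # automatic rotation, every 365 days by default
  deletion_window_in_days = 7    # waiting period before deletion (7-30 days)
}

# A friendly name for the key.
resource "aws_kms_alias" "dynamodb" {
  name          = "alias/terraform-lock-table" # hypothetical alias
  target_key_id = aws_kms_key.dynamodb.key_id
}
```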

🤖 Create dynamo_lock_table.tf file

Now, we create the dynamo_lock_table.tf file. It creates a DynamoDB table that Terraform uses to lock the state while an operation is running. This prevents others from acquiring the lock and potentially corrupting your state.

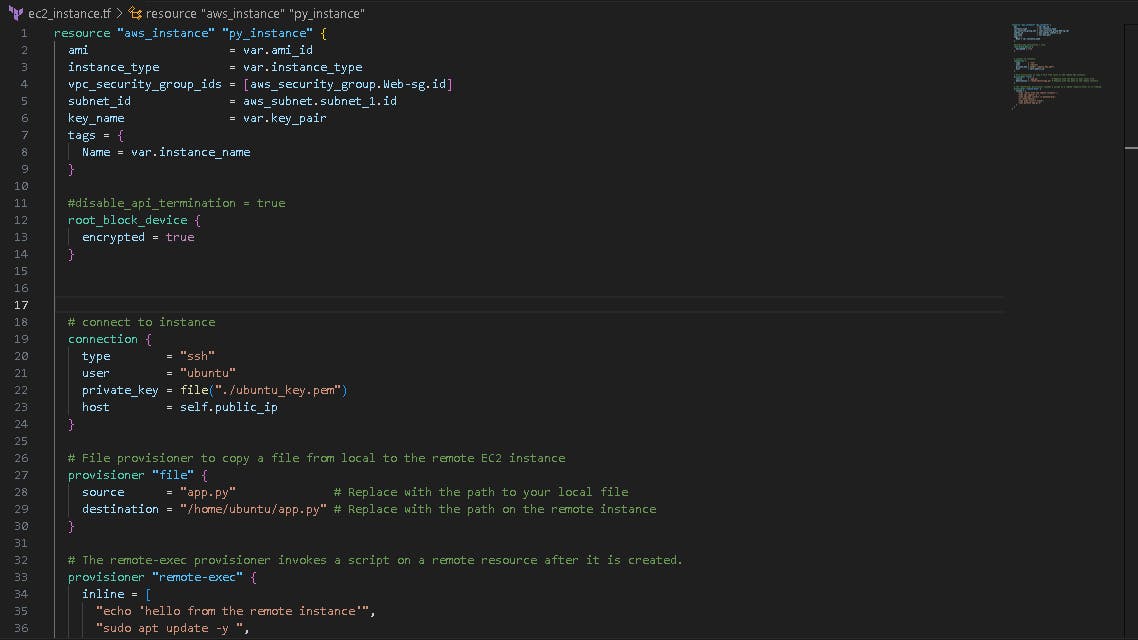

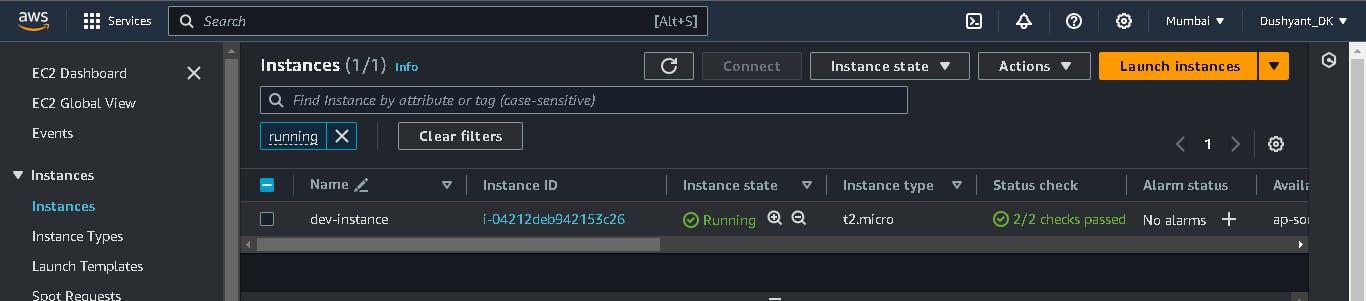
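A sketch of the lock table, assuming the KMS key resource is named aws_kms_key.dynamodb and a hypothetical table name. The S3 backend requires the partition key to be a string attribute named LockID; the server_side_encryption block points DynamoDB at the customer-managed KMS key.

```hcl
# dynamo_lock_table.tf (sketch): state lock table for the S3 backend.
resource "aws_dynamodb_table" "state_lock" {
  name         = "terraform-state-lock" # hypothetical table name
  billing_mode = "PAY_PER_REQUEST"      # no capacity planning needed
  hash_key     = "LockID"               # the S3 backend expects this key name

  attribute {
    name = "LockID"
    type = "S" # string
  }

  # Encrypt the table with the customer-managed KMS key.
  server_side_encryption {
    enabled     = true
    kms_key_arn = aws_kms_key.dynamodb.arn
  }
}
```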

🤖 Create ec2_instance.tf file

Now, we create the ec2_instance.tf file, the configuration file that defines and provisions the AWS EC2 instance.

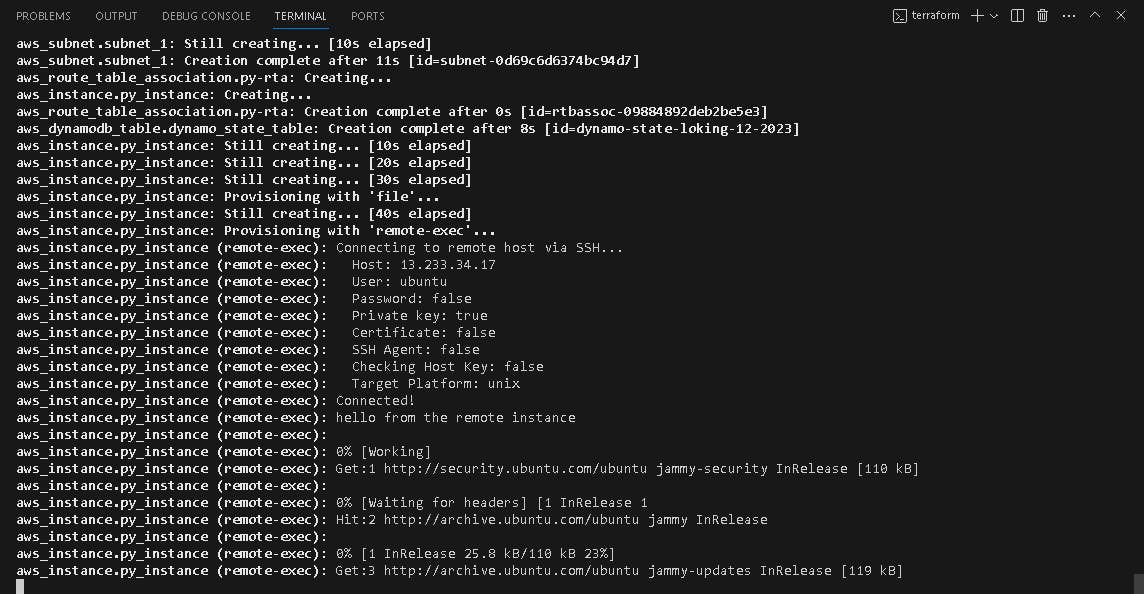
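A minimal sketch of the instance resource. The variable names (ami_id, instance_type, key_name) and the referenced resources (aws_subnet.public, aws_security_group.dev_sg) are hypothetical and must match whatever your own vpc.tf, aws_sg.tf, and variable.tf declare.

```hcl
# ec2_instance.tf (sketch): a single dev server in the public subnet.
resource "aws_instance" "dev_server" {
  ami                    = var.ami_id        # hypothetical variable
  instance_type          = var.instance_type # e.g. "t2.micro"
  subnet_id              = aws_subnet.public.id
  vpc_security_group_ids = [aws_security_group.dev_sg.id]
  key_name               = var.key_name      # name of an existing EC2 key pair

  tags = {
    Name = "dev-server"
  }
}
```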

📲 Provisioners:

Provisioners let us configure resources after they are created. For instance, we may need to install software on an EC2 instance. Here's a simplified example of a provisioner:

The file provisioner copies files or directories from the machine running terraform apply to the newly created resource.

The remote-exec provisioner runs commands on the instance after it's created.

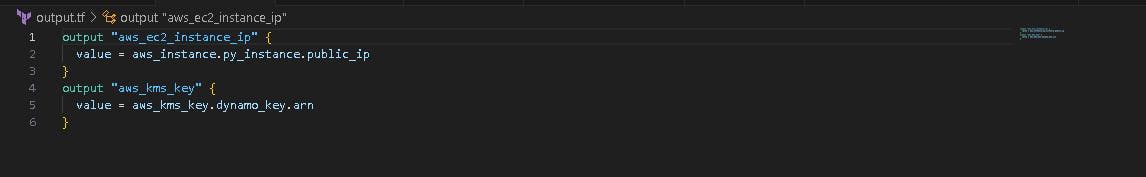
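A sketch of the instance resource with both provisioners nested inside it, assuming an Ubuntu AMI (login user ubuntu), a hypothetical local script scripts/setup.sh, and a hypothetical private_key_path variable. The connection block tells Terraform how to reach the new instance over SSH; self.public_ip refers to the instance being created.

```hcl
# Sketch: dev server with file and remote-exec provisioners.
# ami_id, instance_type, key_name, private_key_path are hypothetical variables.
resource "aws_instance" "dev_server" {
  ami                    = var.ami_id
  instance_type          = var.instance_type
  subnet_id              = aws_subnet.public.id
  vpc_security_group_ids = [aws_security_group.dev_sg.id]
  key_name               = var.key_name

  # How Terraform connects to the new instance for both provisioners.
  connection {
    type        = "ssh"
    user        = "ubuntu" # assumes an Ubuntu AMI
    private_key = file(var.private_key_path)
    host        = self.public_ip
  }

  # Copy a local script from the machine running terraform apply.
  provisioner "file" {
    source      = "scripts/setup.sh" # hypothetical local script
    destination = "/tmp/setup.sh"
  }

  # Run commands on the instance after it is created.
  provisioner "remote-exec" {
    inline = [
      "chmod +x /tmp/setup.sh",
      "sudo /tmp/setup.sh",
    ]
  }
}
```

These provisioners run only when the instance is created, so editing the script later does not re-run it on an existing instance.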

To see values such as the subnet ID after the apply, we declare them in the output.tf file.

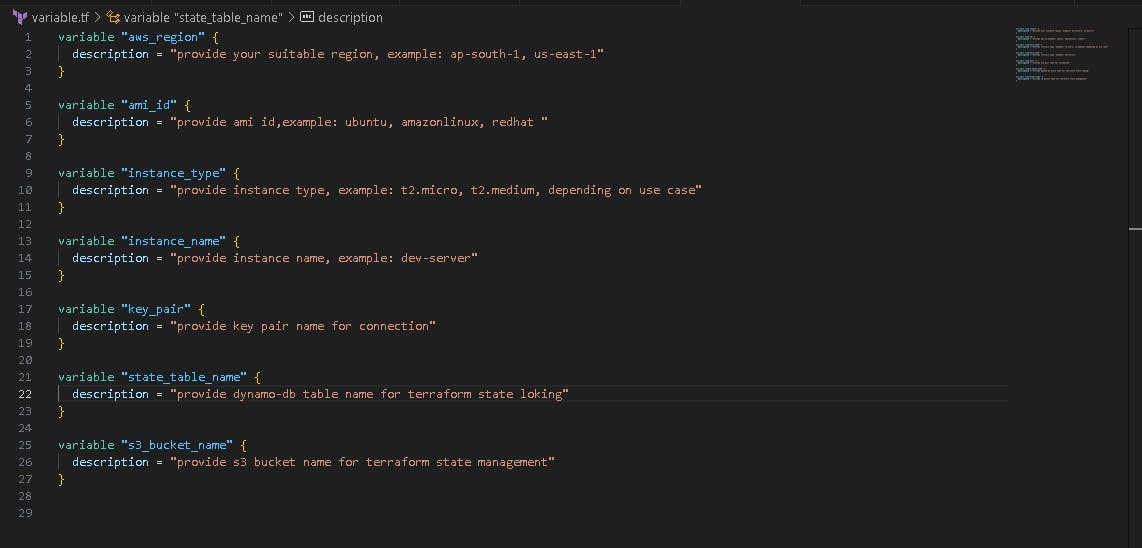
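A sketch of output.tf, assuming the subnet and instance resources are named aws_subnet.public and aws_instance.dev_server (hypothetical names). Terraform prints these values at the end of terraform apply, and terraform output shows them again at any time.

```hcl
# output.tf (sketch): values printed after terraform apply.
output "subnet_id" {
  description = "ID of the public subnet"
  value       = aws_subnet.public.id
}

output "instance_public_ip" {
  description = "Public IP address of the dev server"
  value       = aws_instance.dev_server.public_ip
}
```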

🤖 Create variable.tf & dev.tfvars files

Now, we create the variable.tf and dev.tfvars files.

variable.tf declares the variables: their name, type, description, default value, and additional metadata.

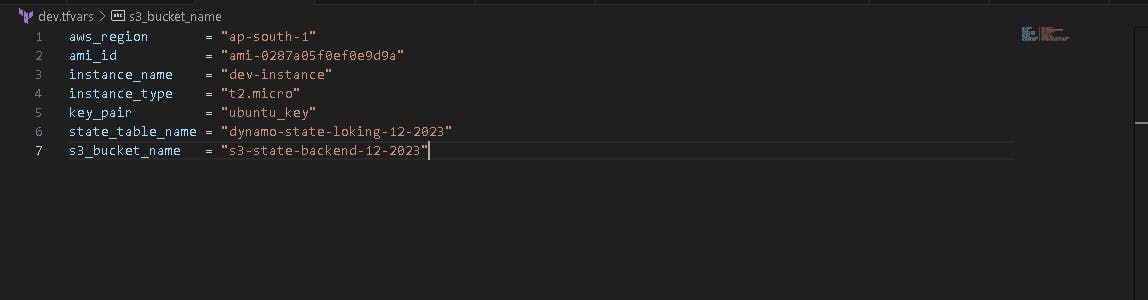
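A sketch of variable.tf with hypothetical variable names. The validation block is optional: Terraform rejects any ami_id that does not start with ami- before creating anything, which catches typos early.

```hcl
# variable.tf (sketch): declarations only, values come from dev.tfvars.
variable "aws_region" {
  description = "AWS region to deploy into"
  type        = string
  default     = "us-east-1"
}

variable "instance_type" {
  description = "EC2 instance type for the dev server"
  type        = string
  default     = "t2.micro"
}

variable "ami_id" {
  description = "AMI ID for the dev server (region specific)"
  type        = string

  # Optional input validation.
  validation {
    condition     = can(regex("^ami-", var.ami_id))
    error_message = "The ami_id value must start with \"ami-\"."
  }
}

variable "key_name" {
  description = "Name of an existing EC2 key pair"
  type        = string
}

variable "private_key_path" {
  description = "Local path to the key pair's private key (.pem)"
  type        = string
}
```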

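The default file name is terraform.tfvars, but I renamed it to dev.tfvars. A .tfvars file supplies the actual variable values at execution time. Terraform loads terraform.tfvars automatically, but a custom name like dev.tfvars must be passed explicitly with -var-file=dev.tfvars, which is why the plan, apply, and destroy commands below include that flag.

You must change the values in dev.tfvars to match your own environment.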

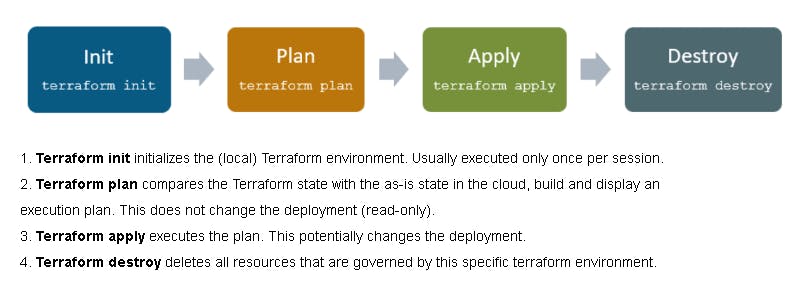
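A sketch of dev.tfvars, assuming the hypothetical variable names below; every value is a placeholder to replace with your own (a real AMI ID for your region, an existing key pair, and the path to its .pem file).

```hcl
# dev.tfvars (sketch): placeholder values only.
aws_region       = "us-east-1"
instance_type    = "t2.micro"
ami_id           = "ami-xxxxxxxxxxxxxxxxx" # replace with a real AMI ID
key_name         = "my-key-pair"           # hypothetical key pair name
private_key_path = "my-key-pair.pem"       # hypothetical path to the .pem file
```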

🎡 Terraform Life Cycle:

The Terraform life cycle consists of INIT, PLAN, APPLY, and DESTROY; we also run FMT and VALIDATE before planning.

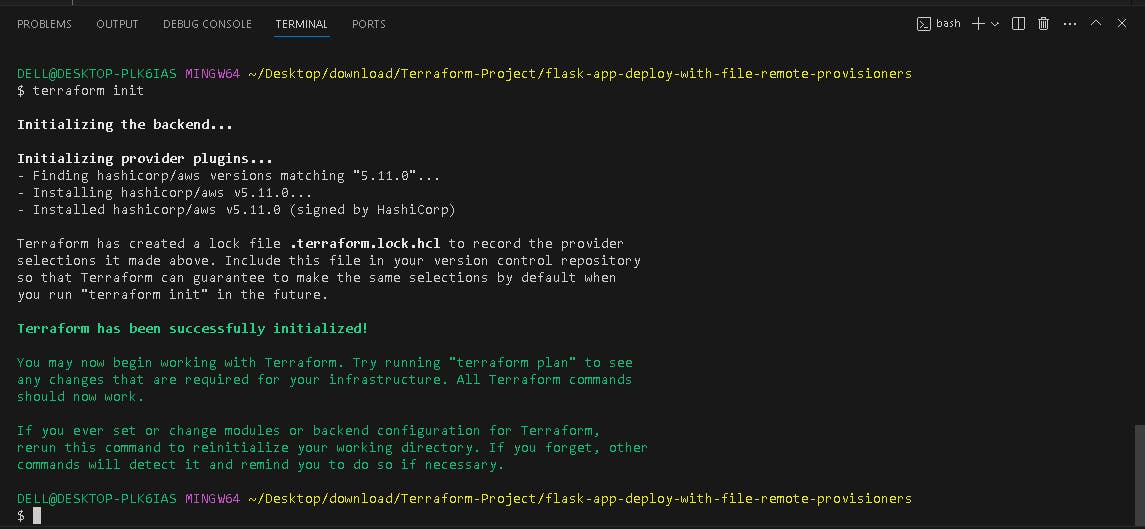

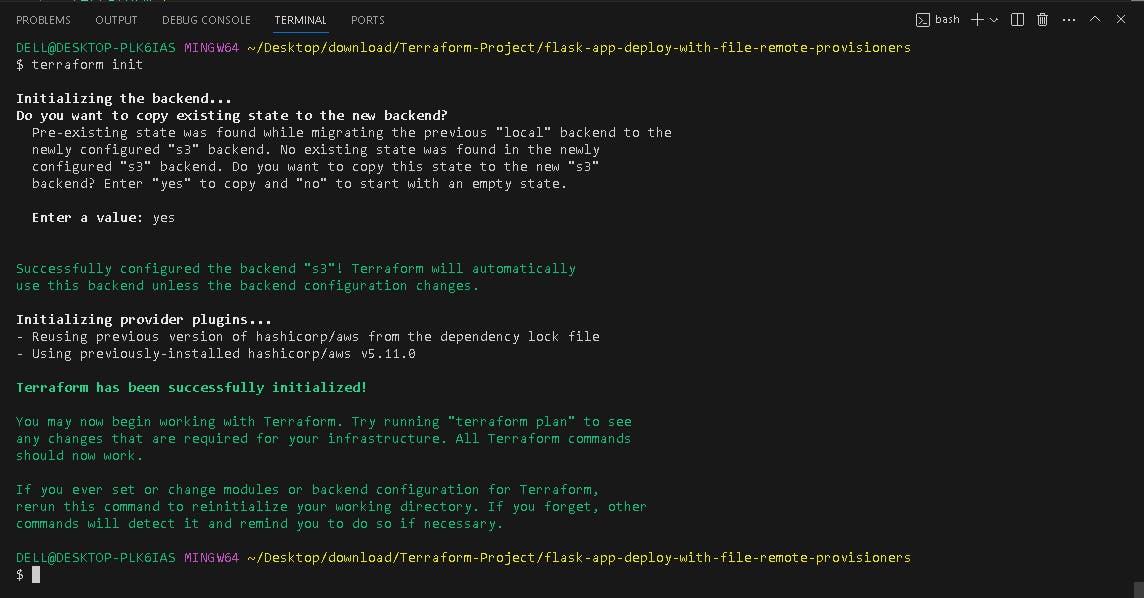

📟 Terraform INIT

terraform init

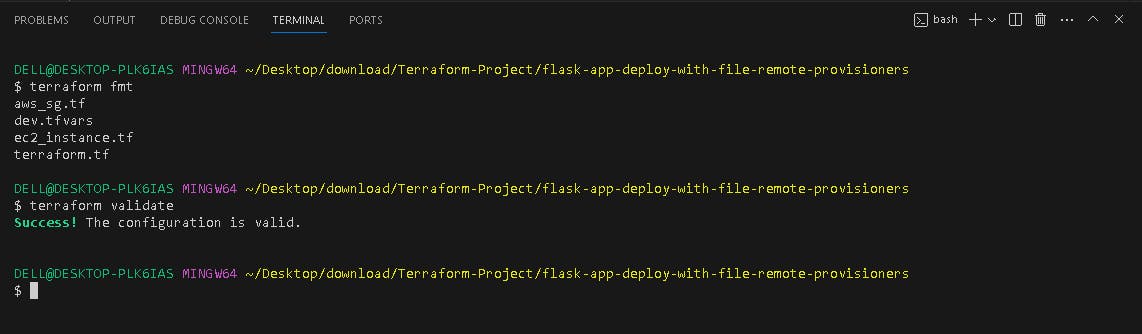

📺 Terraform VALIDATE & FMT

terraform fmt

terraform validate

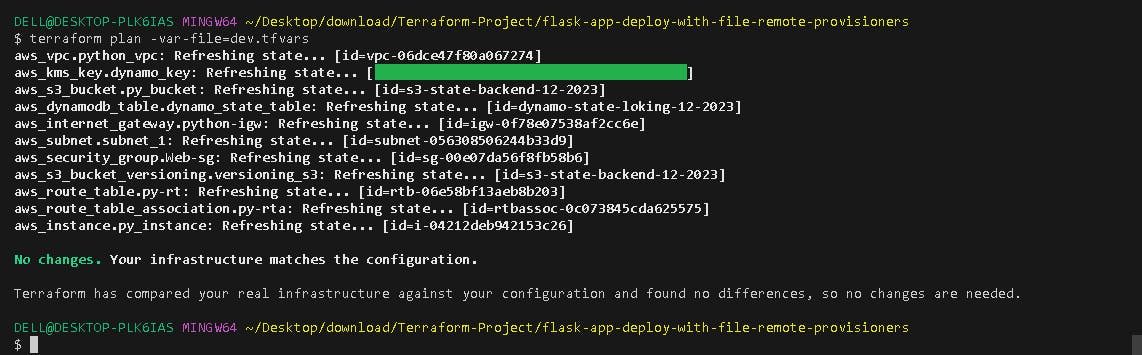

💡 Terraform PLAN

terraform plan -var-file=dev.tfvars

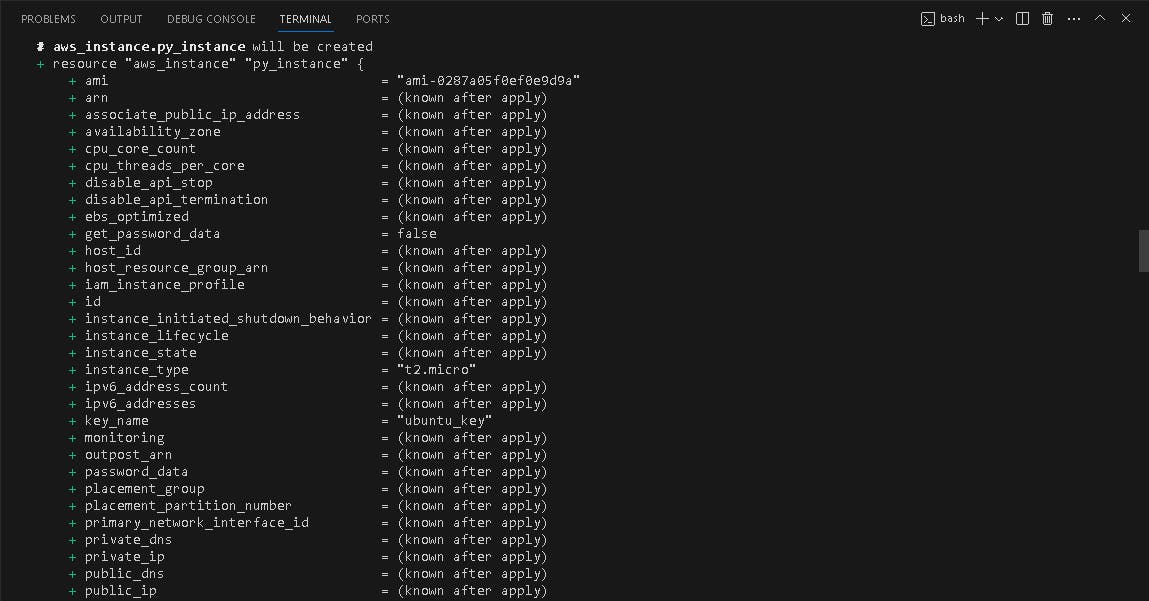

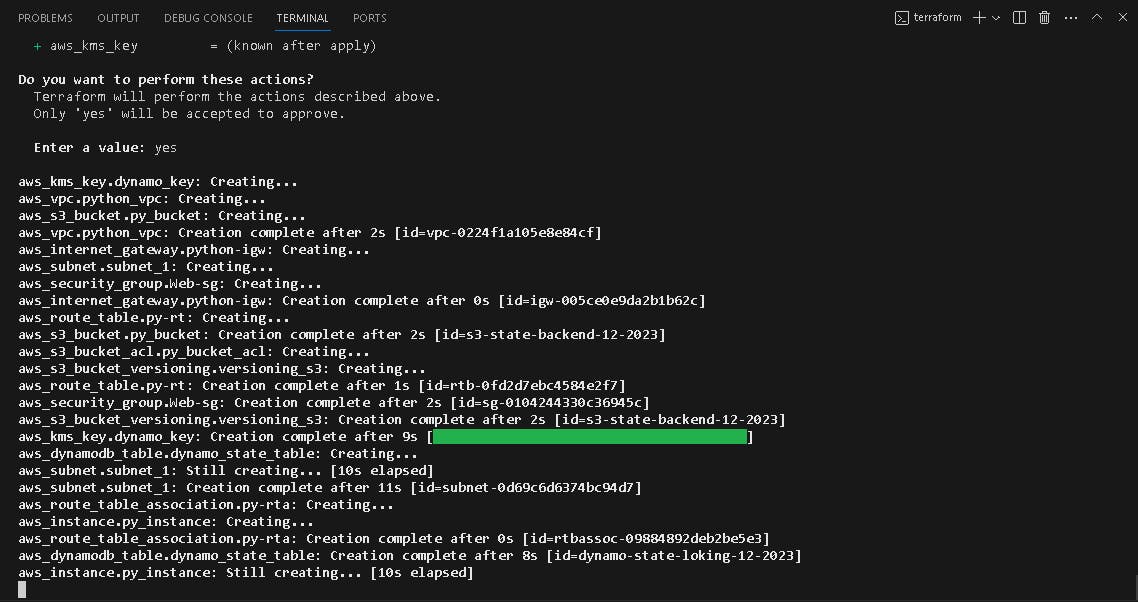

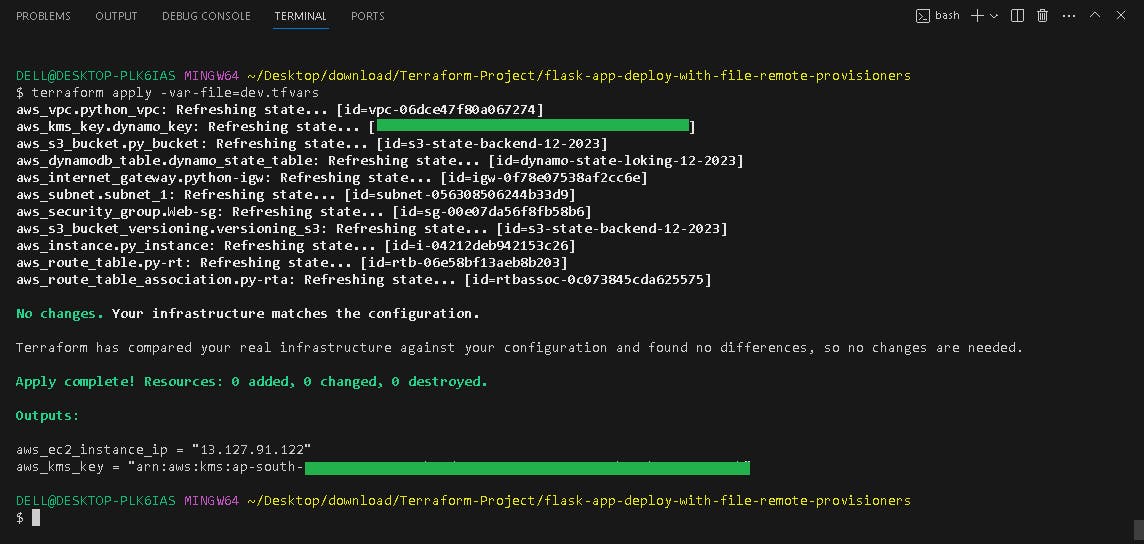

🎢 Terraform APPLY

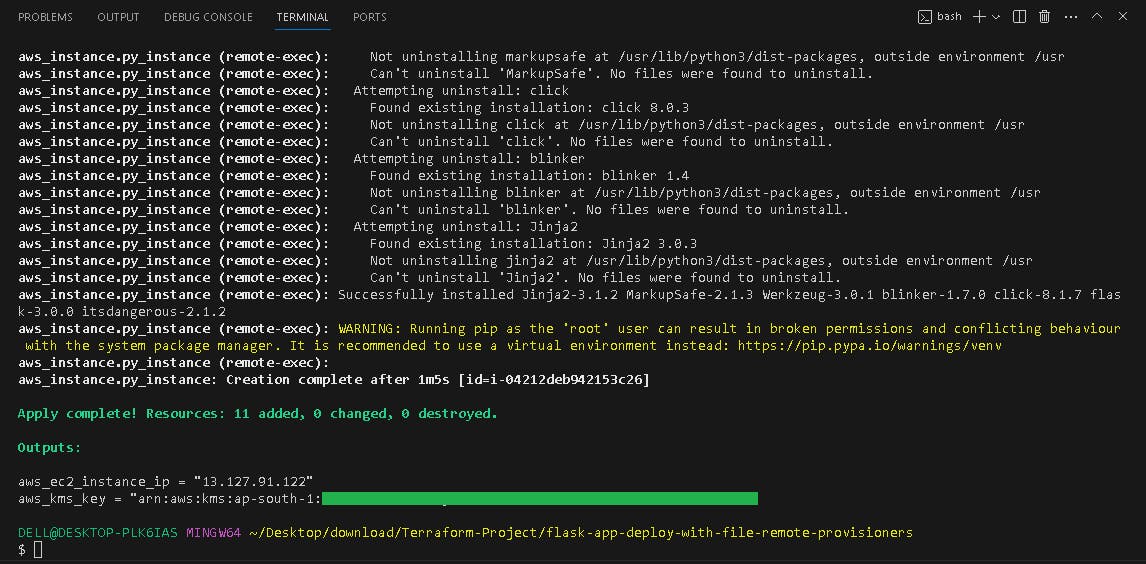

terraform apply -var-file=dev.tfvars

Resources created:

VPC created

EC2 instance created

S3 bucket created

KMS key created

DynamoDB table created

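🔗 Configure Backend:

Now that the S3 bucket and DynamoDB table exist, uncomment the backend block in the terraform.tf file. Because the backend has changed, the next terraform init asks whether to copy the existing local state into the S3 bucket; answer yes.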
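A sketch of the backend block, assuming hypothetical bucket and lock-table names and the us-east-1 region; they must match the bucket and DynamoDB table you just created. Backend blocks cannot reference variables, so these values are written as literals.

```hcl
# terraform.tf (sketch): S3 remote state with DynamoDB locking.
terraform {
  backend "s3" {
    bucket         = "my-terraform-state-bucket-12345" # hypothetical: your state bucket
    key            = "dev/terraform.tfstate"           # object path inside the bucket
    region         = "us-east-1"                       # assumed region
    dynamodb_table = "terraform-state-lock"            # hypothetical: your lock table
    encrypt        = true                              # encrypt the state object at rest
  }
}
```

Recent Terraform releases can also lock state natively in S3 with use_lockfile and mark dynamodb_table as deprecated, but the DynamoDB approach shown here still works.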

📟 Terraform INIT

terraform init

💡 Terraform PLAN

terraform plan -var-file=dev.tfvars

🎢 Terraform APPLY

terraform apply -var-file=dev.tfvars

If you now check the local terraform.tfstate file (in my case, on my Windows laptop), you'll find it empty: the state has moved into the S3 bucket.

To inspect your state, use the command below. It pulls the state file from the S3 bucket and prints it.

terraform state pull

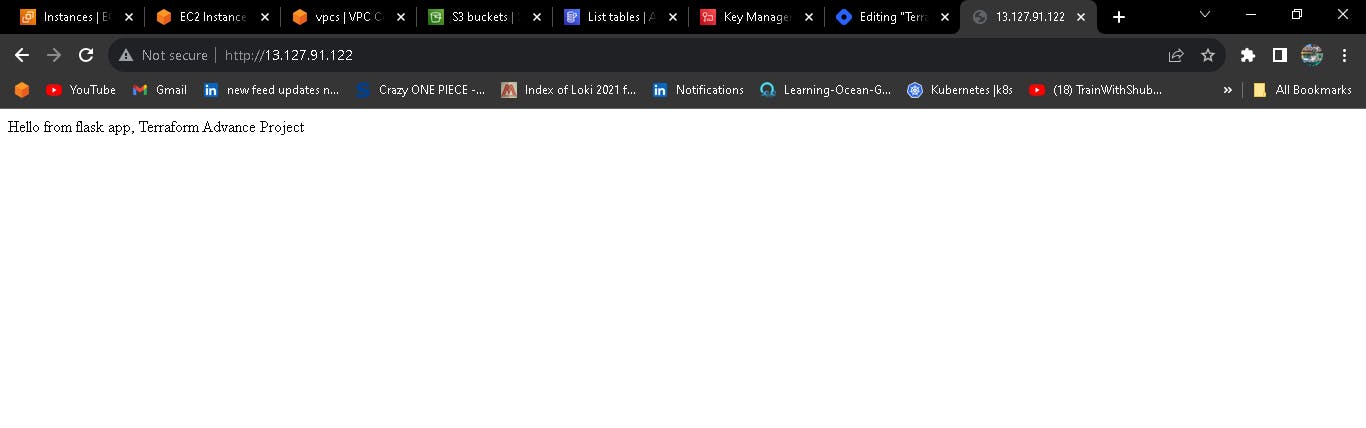

Output of the dev-server instance:

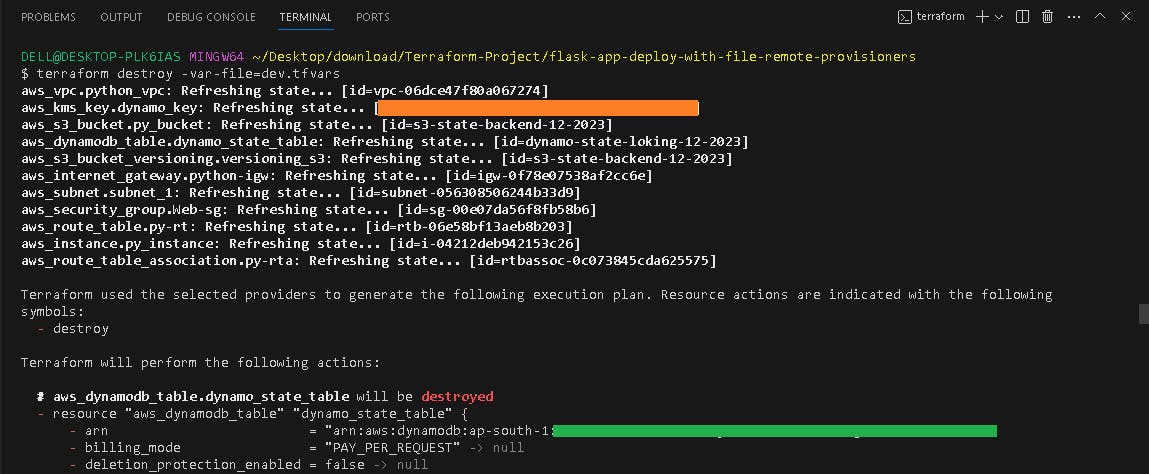

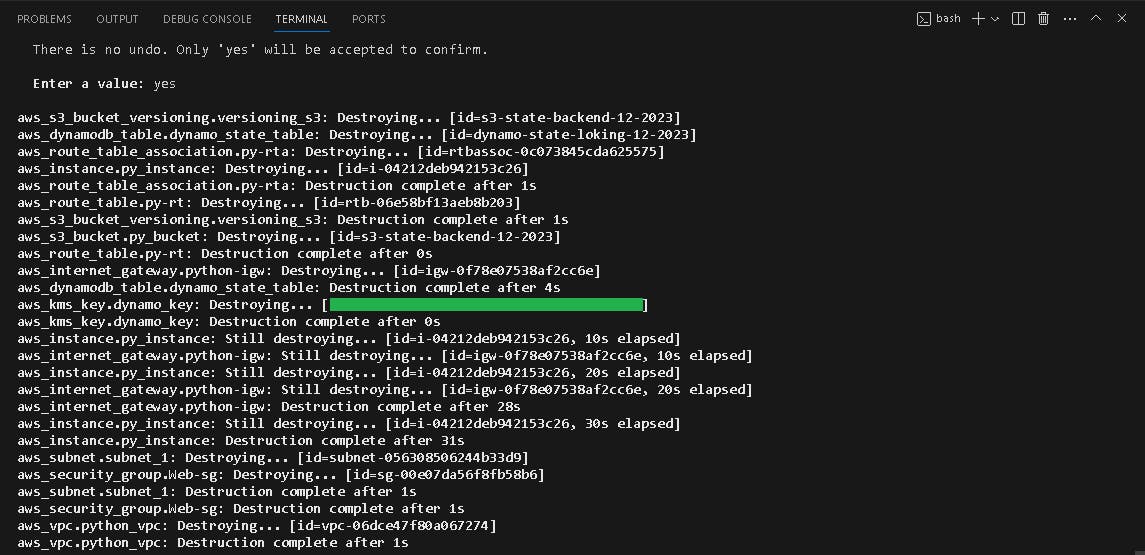

💣 Terraform DESTROY

To remove the resources, use:

terraform destroy -var-file=dev.tfvars

🎇 NOTE:

When you write Terraform files for your project, open the Extensions view, install the Snyk plugin, and create a Snyk account. Snyk then scans your files automatically and suggests fixes for vulnerabilities.

The Snyk Visual Studio Code plugin scans and analyzes your code, including open-source dependencies and infrastructure-as-code configurations.

📦 Conclusion

Today, we've learned how to manage state files, validate configurations, add provisioners to configure resources, and use state locking with a remote backend. These are essential concepts when working with Terraform and AWS.

Remember, DevOps is all about continuous learning, so keep exploring and experimenting with Terraform to become the best DevOps engineer you can be.


The above information reflects my understanding. Suggestions are always welcome. Thanks for reading this article.😊

#aws #cloudcomputing #terraform #Devops #TrainWithShubham #90daysofdevopsc #happylearning

Follow for more content like this:

LinkedIn: linkedin.com/in/dushyant-kumar-dk

Blog: dushyantkumark.hashnode.dev

Github: https://github.com/dushyantkumark/terraform-advance-projects